All You Need to Know About Classification and Regression Models in Machine Learning

The world of Machine Learning (ML) buzzes with terms like classification, regression, and evaluation. But what do these terms really mean? This article is a foundational guide that unpacks these core concepts and equips you to navigate the field of ML.

Classification: Sorting the Unknown

Classification tasks involve predicting a discrete category for a new data point. Imagine sorting emails as spam or not spam, classifying handwritten digits, or predicting if a customer is likely to churn (cancel their service). Classification algorithms learn from labeled data, where each data point has a pre-assigned category. Here are common classification algorithms:

Logistic Regression: Calculates the probability of a data point belonging to a specific class.

K-Nearest Neighbors (KNN): Classifies a new data point based on the majority class of its nearest neighbors in the training data.

Decision Trees: A tree-like structure where decisions based on features (attributes) lead to a final classification.
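To make the decision tree idea concrete, here is a minimal sketch that fits a shallow tree and prints the rules it learned. It assumes scikit-learn's bundled Iris dataset (`load_iris`) rather than a CSV file, and the depth limit of 2 is an arbitrary choice made only to keep the printed tree short.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, export_text

# Assumption: use the Iris data bundled with scikit-learn instead of a CSV file
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42
)

# max_depth=2 is an illustrative choice that keeps the tree easy to read
tree = DecisionTreeClassifier(max_depth=2, random_state=0)
tree.fit(X_train, y_train)

# Print the feature-based decisions that lead to each predicted class
print(export_text(tree, feature_names=list(iris.feature_names)))

tree_accuracy = tree.score(X_test, y_test)
print("Decision tree test accuracy:", tree_accuracy)
```

Each indented line in the printed output is one feature threshold, and each leaf shows the class the tree assigns once those thresholds are satisfied.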

The example below demonstrates classification using two popular algorithms, K-Nearest Neighbors (KNN) and Logistic Regression:

    import pandas as pd
    from sklearn.linear_model import LogisticRegression
    from sklearn.metrics import accuracy_score
    from sklearn.model_selection import train_test_split
    from sklearn.neighbors import KNeighborsClassifier

    # Load the data (replace 'iris.csv' with your actual file path)
    data = pd.read_csv('iris.csv')

    # Separate features (X) and target variable (y)
    X = data.drop('species', axis=1)  # All columns except 'species'
    y = data['species']

    # Split data into training and testing sets
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # K-Nearest Neighbors (KNN) Classification
    # Create a KNN classifier with k=3 neighbors
    knn_classifier = KNeighborsClassifier(n_neighbors=3)

    # Train the KNN model on the training data
    knn_classifier.fit(X_train, y_train)

    # Make predictions on the test set
    knn_predictions = knn_classifier.predict(X_test)

    # Evaluate KNN model performance (using accuracy metric)
    knn_accuracy = accuracy_score(y_test, knn_predictions)
    print("KNN Accuracy:", knn_accuracy)

    # Logistic Regression Classification
    # Create a logistic regression classifier
    # (a higher iteration cap helps the solver converge on unscaled features)
    logistic_regressor = LogisticRegression(max_iter=200)

    # Train the logistic regression model on the training data
    logistic_regressor.fit(X_train, y_train)

    # Make predictions on the test set
    logistic_predictions = logistic_regressor.predict(X_test)

    # Evaluate logistic regression model performance (using accuracy metric)
    logistic_accuracy = accuracy_score(y_test, logistic_predictions)
    print("Logistic Regression Accuracy:", logistic_accuracy)

This code performs the following steps:

Load the data: Reads a CSV file containing features and a target variable (e.g., 'iris.csv' for the Iris flower dataset).

Separate features and target: Splits the data into features (X) and the target variable (y) representing the class labels.

Train-test split: Divides the data into training and testing sets for model training and evaluation.

KNN Classification:

Creates a KNN classifier with a chosen number of neighbors (k=3 in this case).

Trains the KNN model on the training data.

Makes predictions on the test set using the trained model.

Evaluates the KNN model's performance using accuracy score.

Logistic Regression Classification:

Creates a logistic regression classifier.

Trains the logistic regression model on the training data.

Makes predictions on the test set using the trained model.

Evaluates the logistic regression model's performance using accuracy score.

This example demonstrates how to train and evaluate two different classification models. You can experiment with different algorithms and hyperparameter settings to find the best model for your specific classification task. Remember to replace 'iris.csv' with your actual dataset path and ensure it contains labeled data suitable for classification.
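One way to experiment with a hyperparameter is to compare several values of k for KNN. The sketch below is only an illustration under stated assumptions: it uses scikit-learn's bundled Iris data instead of a CSV file, scores each candidate with 5-fold cross-validation rather than the single train-test split used above, and the candidate values of k are arbitrary.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Assumption: bundled Iris data stands in for your own labeled dataset
X, y = load_iris(return_X_y=True)

# Score each candidate k with 5-fold cross-validation (mean accuracy)
cv_scores = {}
for k in (1, 3, 5, 7, 9):
    knn = KNeighborsClassifier(n_neighbors=k)
    scores = cross_val_score(knn, X, y, cv=5)
    cv_scores[k] = scores.mean()
    print(f"k={k}: mean CV accuracy = {cv_scores[k]:.3f}")

# Pick the candidate with the highest mean accuracy
best_k = max(cv_scores, key=cv_scores.get)
print("Best k among the candidates:", best_k)
```

Averaging over several folds makes the comparison less dependent on one particular split, which matters on a dataset as small as Iris.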

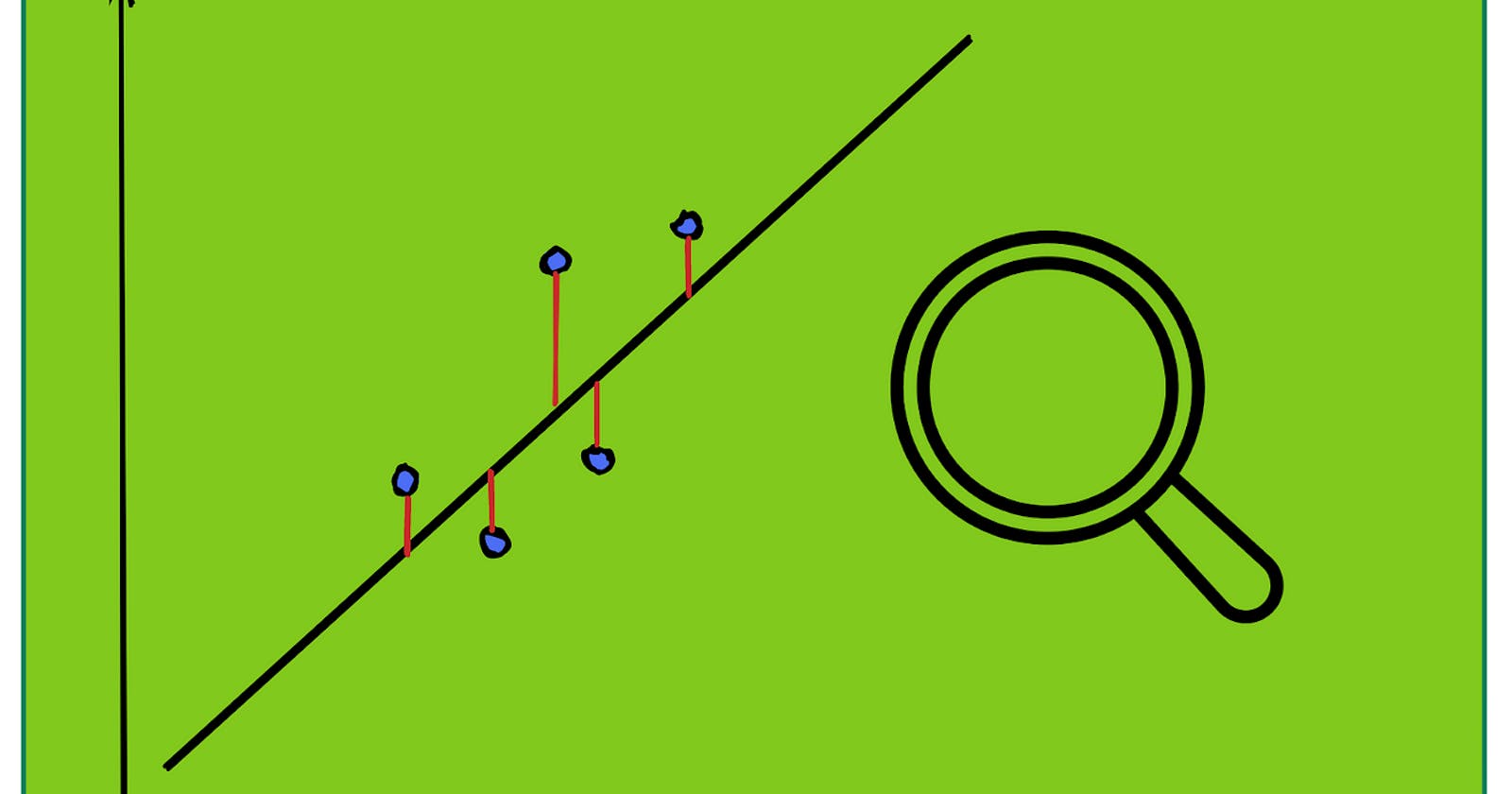

Regression: Predicting Continuous Values

Regression tasks focus on predicting a continuous value for a new data point. This could be forecasting house prices based on size and location, predicting stock prices, or estimating customer lifetime value. Regression algorithms learn the relationship between input features and a continuous target variable. Here are common regression algorithms:

Linear Regression: Models the relationship between features and a target variable using a linear equation.

Support Vector Regression (SVR): Fits a function that keeps as many data points as possible within a margin (epsilon) around its predictions, penalizing only the errors that fall outside that margin.

Random Forest Regression: An ensemble method that combines multiple decision trees for improved prediction accuracy.
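As a rough sketch of the last item, the snippet below trains a random forest regressor. It assumes synthetic data generated with scikit-learn's `make_regression` in place of a real dataset, and the sample count, noise level, and number of trees are illustrative choices rather than recommendations.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Assumption: synthetic data stands in for a real regression dataset
X, y = make_regression(n_samples=500, n_features=4, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# An ensemble of 100 decision trees whose predictions are averaged
forest = RandomForestRegressor(n_estimators=100, random_state=0)
forest.fit(X_train, y_train)

# Evaluate on the held-out test set
forest_predictions = forest.predict(X_test)
forest_mse = mean_squared_error(y_test, forest_predictions)
print("Random Forest Mean Squared Error:", forest_mse)
```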

The example below demonstrates regression using two popular algorithms, Linear Regression and Support Vector Regression (SVR):

    import pandas as pd
    from sklearn.linear_model import LinearRegression
    from sklearn.metrics import mean_squared_error
    from sklearn.model_selection import train_test_split
    from sklearn.svm import SVR

    # Load the data (replace 'california_housing.csv' with your actual file path)
    data = pd.read_csv('california_housing.csv')

    # Select features (X) and target variable (y)
    # Choose appropriate features based on your problem
    X = data[['median_income', 'total_rooms']]  # Example features
    y = data['median_house_value']  # Target variable (e.g., median house price)

    # Split data into training and testing sets
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Linear Regression
    # Create a linear regression model
    linear_regressor = LinearRegression()

    # Train the linear regression model on the training data
    linear_regressor.fit(X_train, y_train)

    # Make predictions on the test set
    linear_predictions = linear_regressor.predict(X_test)

    # Evaluate linear regression model performance (using Mean Squared Error)
    linear_mse = mean_squared_error(y_test, linear_predictions)
    print("Linear Regression Mean Squared Error:", linear_mse)

    # Support Vector Regression (SVR)
    # Create an SVR model with linear kernel (adjust kernel if needed)
    # SVR is sensitive to feature scale; standardizing the features usually helps
    svr_regressor = SVR(kernel='linear')

    # Train the SVR model on the training data
    svr_regressor.fit(X_train, y_train)

    # Make predictions on the test set
    svr_predictions = svr_regressor.predict(X_test)

    # Evaluate SVR model performance (using Mean Squared Error)
    svr_mse = mean_squared_error(y_test, svr_predictions)
    print("SVR Mean Squared Error:", svr_mse)

This code performs the following steps:

Load the data: Reads a CSV file containing features and a target variable (e.g., 'california_housing.csv' for a housing price prediction task).

Separate features and target: Splits the data into features (X) and the target variable (y) representing the continuous value to be predicted.

Train-test split: Divides the data into training and testing sets for model training and evaluation.

Linear Regression:

Creates a linear regression model.

Trains the linear regression model on the training data.

Makes predictions on the test set using the trained model.

Evaluates the linear regression model's performance using Mean Squared Error (MSE).

Support Vector Regression (SVR):

Creates an SVR model with a chosen kernel (linear kernel in this case).

Trains the SVR model on the training data.

Makes predictions on the test set using the trained model.

Evaluates the SVR model's performance using Mean Squared Error (MSE).

This example demonstrates how to train and evaluate two different regression models. You can experiment with different algorithms and hyperparameter settings to find the best model for your specific prediction task. Remember to replace 'california_housing.csv' with your actual dataset path and ensure it contains relevant features and a numerical target variable suitable for regression.

Model Evaluation: Measuring Success

Building a machine learning model is just one step. Evaluating its performance is crucial for gauging its effectiveness and identifying areas for improvement. Different metrics are used for classification and regression tasks:

Classification Evaluation:

Accuracy: The percentage of correctly classified data points.

Precision: The proportion of true positives among all predicted positives. High precision means few false positives.

Recall: The proportion of actual positives that the model correctly identifies. High recall means few false negatives.

F1-Score: The harmonic mean of precision and recall, balancing both metrics.
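The four classification metrics above can be worked through by hand on a small example. The sketch below uses made-up labels for a binary task (the values are hypothetical and chosen only to keep the arithmetic easy to follow) and computes each metric directly from the counts of true and false positives and negatives.

```python
# Hypothetical labels for a binary task: 1 = positive (e.g. spam), 0 = negative
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]

# Count each outcome type by comparing actual and predicted labels
pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

accuracy = (tp + tn) / len(pairs)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)

print(f"accuracy={accuracy:.2f} precision={precision:.2f} "
      f"recall={recall:.2f} f1={f1:.2f}")
# prints: accuracy=0.70 precision=0.67 recall=0.50 f1=0.57
```

In practice, scikit-learn provides `accuracy_score`, `precision_score`, `recall_score`, and `f1_score` in `sklearn.metrics`, so these formulas rarely need to be written out.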

Regression Evaluation:

Mean Squared Error (MSE): The average squared difference between predicted and actual values. Lower MSE indicates better fit.

Root Mean Squared Error (RMSE): The square root of MSE, which expresses the typical size of the prediction error in the same units as the target variable.

R-Squared: Represents the proportion of variance in the target variable explained by the model. Higher R-Squared indicates a better fit.
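The three regression metrics above can also be computed by hand. The following sketch uses four hypothetical actual and predicted values, chosen only so the arithmetic is easy to check, and applies the definitions directly.

```python
import math

# Hypothetical actual and predicted values for four data points
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.0, 5.0, 8.0, 10.0]

n = len(y_true)
squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]

# MSE: average squared difference; RMSE: its square root
mse = sum(squared_errors) / n
rmse = math.sqrt(mse)

# R-squared: 1 minus (residual sum of squares / total sum of squares)
mean_true = sum(y_true) / n
ss_res = sum(squared_errors)
ss_tot = sum((t - mean_true) ** 2 for t in y_true)
r_squared = 1 - ss_res / ss_tot

print(f"MSE={mse:.2f} RMSE={rmse:.3f} R^2={r_squared:.2f}")
# prints: MSE=0.75 RMSE=0.866 R^2=0.85
```

scikit-learn's `mean_squared_error` and `r2_score` in `sklearn.metrics` compute the same quantities for arrays of any size.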

The right evaluation metric depends on the specific problem and its goals.
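A small illustration of why the metric matters: on imbalanced data, accuracy alone can look strong while the model misses every case of interest. The sketch below uses hypothetical labels (95 negatives, 5 positives) and a trivial "model" that always predicts the majority class.

```python
# Hypothetical imbalanced data: 95 negatives (0) and 5 positives (1)
y_true = [0] * 95 + [1] * 5
# A trivial "model" that always predicts the majority class
y_pred = [0] * 100

# Accuracy: share of predictions that match the actual label
correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
accuracy = correct / len(y_true)

# Recall: share of actual positives that were found
tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
recall = tp / (tp + fn)

print(f"accuracy={accuracy:.2f} recall={recall:.2f}")
# prints: accuracy=0.95 recall=0.00
```

If the positives are the cases that matter (for example, customers about to churn), recall or F1-score would reveal the problem that accuracy hides.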

In conclusion, classification, regression, and model evaluation are fundamental building blocks of machine learning. By understanding these concepts, you can embark on your own machine learning journey, building models that solve real-world problems. As you delve deeper, explore topics like model selection, feature engineering, and hyperparameter tuning to refine your skills and empower your machine learning projects.